Introduction

Ceph is an open-source, software-defined storage system that is scalable, fault-tolerant and resilient, and many enterprises trust it today. It is a distributed storage system that manages large amounts of data and provides clients with high-performance storage services, which has made it a popular choice for organizations around the world. In this blog, we’ll give an overview of Ceph storage and go over its high-level architecture and components. We’ll also explain why businesses should choose Ceph for data storage.

Ceph Storage Architecture

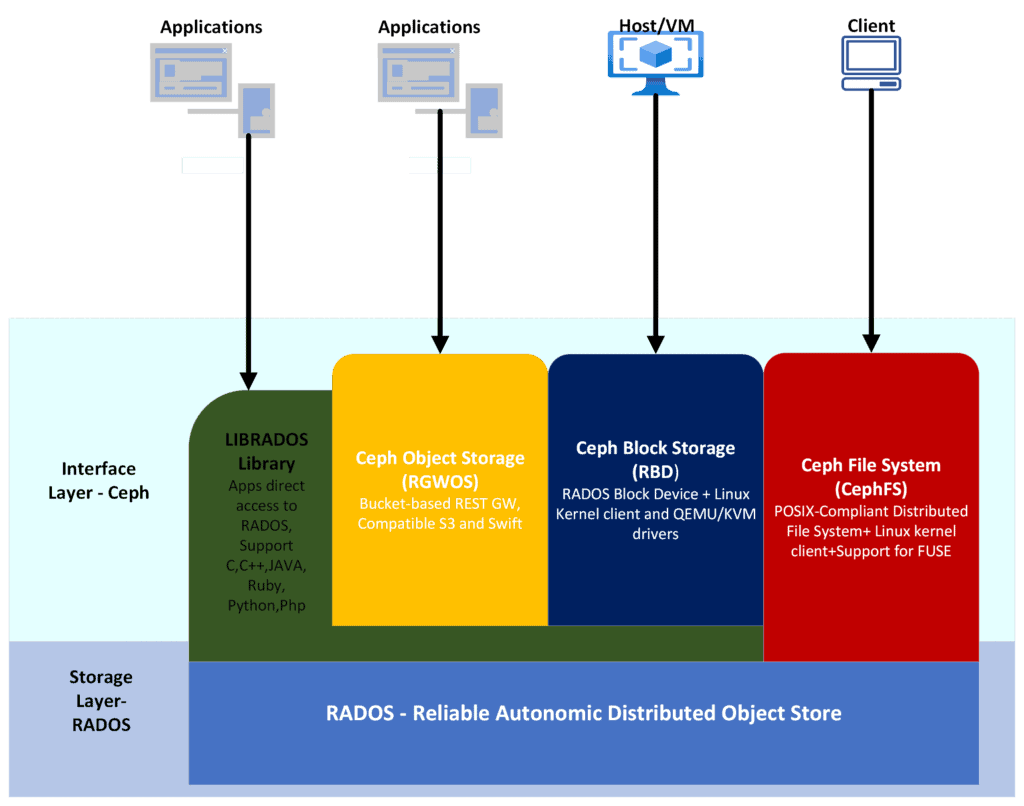

Ceph storage components are deployed together to form a Ceph Storage Cluster. A Ceph Storage Cluster has two distinct architectural layers:

- Storage Layer – RADOS

- Interface Layer – Ceph

Components on each layer collaborate with one another to form a decentralized, distributed Ceph Storage Cluster. The cluster is built on RADOS and consists of several Ceph daemons (pieces of software). The sections below explain the necessary components and terminology and, most importantly, the CRUSH algorithm, which determines where data is stored in, and retrieved from, the Ceph Storage Cluster.

Storage Layer – RADOS

RADOS stands for Reliable Autonomic Distributed Object Store. RADOS is the backend (lower) layer of a Ceph Storage Cluster and provides the underlying object store. Ceph Object Storage, Ceph Block Storage and the Ceph File System all rely on it as their primary storage system. RADOS distributes the storage of objects across the nodes that make up the cluster, and it replicates data, fails over automatically and heals itself. It is highly scalable and can store petabytes of data. RADOS comprises the three major components below, along with the Ceph Manager.

Ceph Object Storage Daemon (Ceph-OSD)

The Object Storage Daemon (OSD) is the piece of software that provides object storage services. OSDs run on every storage node in the cluster, typically one OSD per disk. Each OSD is responsible for storing objects on its local disk and managing them. OSDs communicate with one another to maintain data consistency and redundancy, replicating and recovering data among themselves.

Ceph Monitors (Ceph-Mon)

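The Monitor (Mon) is a daemon that runs on one or more nodes in the cluster and tracks the state of the Ceph cluster. Monitors maintain the master copy of the cluster map, which includes the OSD map and the CRUSH map, and they keep track of OSDs, CephFS metadata servers and other cluster components. They also handle configuration changes and failover operations. Because monitors agree on the cluster state by majority (using the Paxos consensus algorithm), a cluster usually runs an odd number of them, such as three or five.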
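Ceph monitors only agree on the cluster state when a strict majority of them (a quorum) can communicate. The short Python sketch below works through that arithmetic. It assumes nothing beyond the strict-majority rule, and the function name `monitor_quorum` is hypothetical, not part of any Ceph API.

```python
def monitor_quorum(num_monitors: int) -> tuple[int, int]:
    """Return (quorum_size, tolerated_failures) for a set of monitors.

    Assumes a quorum is a strict majority of the configured monitors.
    """
    if num_monitors < 1:
        raise ValueError("a cluster needs at least one monitor")
    quorum = num_monitors // 2 + 1        # strict majority
    tolerated = num_monitors - quorum     # monitors that can fail while quorum holds
    return quorum, tolerated


for n in (1, 3, 4, 5):
    q, f = monitor_quorum(n)
    print(f"{n} monitor(s): quorum of {q}, survives {f} failure(s)")
```

Four monitors survive no more failures than three do, which is why odd monitor counts are the usual choice.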

Ceph Metadata Servers (Ceph-MDS)

The Metadata Server (MDS) is the CephFS component that provides metadata services. MDSs manage file system metadata such as file names, permissions and the directory layout, while the file data itself lives on the OSDs. To deliver file system services, MDSs work closely with OSDs. Ceph Block Storage and Ceph Object Storage do not use an MDS.

Ceph Manager (Ceph-Mgr)

The Ceph Manager is the component that offers a centralized management and monitoring interface for Ceph clusters. It is in charge of monitoring the overall health and status of a Ceph cluster, and of coordinating other administrative duties such as configuration, deployment and scaling.

Interface Layer – Ceph

Ceph provides three primary storage interfaces: the Ceph File System, Ceph Object Storage and Ceph Block Storage. Working together, they form a unified storage system that is both fault-tolerant and scalable. Underneath all three, Ceph stores data as objects in a distributed object store, which differs from file-system-based storage architectures. In addition, LIBRADOS, Ceph’s software library, gives applications direct access to RADOS (the Reliable Autonomic Distributed Object Store), which underpins RADOS Gateway (RGW) object storage, the RADOS Block Device and the Ceph File System.

Ceph Block Storage (RBD)

Ceph Block Storage offers distributed block storage that can serve as a storage backend for virtual machines, databases and other applications that need block storage. It provides block devices through the RADOS Block Device (RBD), which stripes each block device image across many RADOS objects. RBD images are thin-provisioned: storage is allocated on demand as data is written, not when the image is created. Applications can access RBD images through the RBD application programming interfaces and, through a gateway, over iSCSI, which lets them use the storage via block-level interfaces.
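RBD stores an image as a sequence of fixed-size RADOS objects, and an object only comes into existence once part of it is written, which is what thin provisioning means here. The Python sketch below illustrates both ideas. It assumes the 4 MiB default object size and the `rbd_data.<image id>.<object number as 16 hex digits>` naming used for format-2 images (worth checking against your release); `rbd_object_for_offset` and `ThinImage` are hypothetical helpers, and a dictionary stands in for RADOS.

```python
OBJECT_SIZE = 4 * 1024 * 1024  # assumed default RBD object size (4 MiB)


def rbd_object_for_offset(image_id: str, offset: int,
                          object_size: int = OBJECT_SIZE) -> tuple[str, int]:
    """Map a byte offset in an image to (backing object name, offset inside it)."""
    if offset < 0:
        raise ValueError("offset must be non-negative")
    object_no = offset // object_size
    return f"rbd_data.{image_id}.{object_no:016x}", offset % object_size


class ThinImage:
    """Toy thin-provisioned image: a backing object exists only after a write."""

    def __init__(self, image_id: str, size: int, object_size: int = OBJECT_SIZE):
        self.image_id = image_id
        self.size = size                  # advertised size; nothing allocated yet
        self.object_size = object_size
        self.objects: dict[str, bytearray] = {}   # stands in for RADOS objects

    def write(self, offset: int, data: bytes) -> None:
        if offset + len(data) > self.size:
            raise ValueError("write past end of image")
        pos = 0
        while pos < len(data):            # a write may span several objects
            name, inner = rbd_object_for_offset(self.image_id, offset + pos,
                                                self.object_size)
            chunk = data[pos:pos + self.object_size - inner]
            obj = self.objects.setdefault(name, bytearray())  # allocate on demand
            if len(obj) < inner + len(chunk):
                obj.extend(b"\0" * (inner + len(chunk) - len(obj)))
            obj[inner:inner + len(chunk)] = chunk
            pos += len(chunk)


img = ThinImage("abc123", size=10 * 1024**3)   # a "10 GiB" image with no objects
img.write(OBJECT_SIZE - 4, b"ABCDEFGH")        # straddles the first object boundary
print(sorted(img.objects))
```

The 10 GiB image consumes no objects until the write, and the 8-byte write lands in exactly the two objects it touches.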

Ceph Object Storage (RGW)

Ceph Object Storage is a distributed storage system that follows an object-based storage model: each object is stored together with its data and metadata, and a unique identifier is required to access it. To protect data integrity and ensure that no data is lost if a node fails, Ceph replicates each object across multiple nodes. Ceph Object Storage supports S3- and Swift-compatible application programming interfaces, which let applications access storage through RESTful interfaces. RADOS Gateway (RGW) is the name of Ceph’s native object storage gateway.

Ceph File System (CephFS)

The Ceph File System (CephFS) is a distributed file system that offers a POSIX-compliant interface. CephFS provides a file system interface on top of RADOS and stores both file data and metadata as objects in RADOS. Clients can mount CephFS directly, and it can also be exported over the Network File System (NFS) and SMB/CIFS (Common Internet File System) protocols, via NFS-Ganesha and Samba respectively, which let applications access storage through file-level interfaces.

LIBRADOS

Librados is the Ceph library you can integrate into your own applications to talk directly to a Ceph cluster using Ceph’s native protocol. Instead of going through higher-level protocols such as Amazon S3, librados lets your application use Ceph’s native communication protocol to take advantage of its power, speed and flexibility. Your program can read and write simple objects and perform complex operations, such as wrapping several actions in a single transaction or running them asynchronously, using a wide range of methods. Librados supports Java, PHP, Python, C and C++.
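As a minimal sketch of what talking to RADOS through librados looks like, the function below uses the Python binding that ships with Ceph (the `rados` module, usually packaged as `python3-rados`) to write an object, read it back and delete it. The configuration path, pool name and object name are assumptions for illustration, and `roundtrip` itself is a hypothetical helper; running it requires a reachable cluster and an existing pool.

```python
def roundtrip(pool: str, oid: str, payload: bytes,
              conffile: str = "/etc/ceph/ceph.conf") -> bytes:
    """Write an object with librados, read it back, then delete it."""
    # Imported here so this file can be loaded on machines without Ceph installed.
    import rados

    cluster = rados.Rados(conffile=conffile)   # assumed config file location
    cluster.connect()
    try:
        ioctx = cluster.open_ioctx(pool)       # I/O context bound to one pool
        try:
            ioctx.write_full(oid, payload)     # create or replace the whole object
            data = ioctx.read(oid, length=len(payload))
            ioctx.remove_object(oid)           # clean up the test object
            return data
        finally:
            ioctx.close()
    finally:
        cluster.shutdown()


# Example (needs a running cluster; "mypool" is a hypothetical pool name):
# print(roundtrip("mypool", "greeting", b"hello ceph"))
```

The nested `try`/`finally` blocks make sure the I/O context and the cluster handle are released even if a read or write fails.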

CRUSH

CRUSH stands for Controlled Replication Under Scalable Hashing. It is the Ceph cluster’s distributed data placement algorithm: rather than looking up object locations in a central table, Ceph clients and OSDs use CRUSH to compute where data should be stored across many storage nodes. CRUSH places data to maximize performance, availability and scalability, and it does so efficiently and cheaply even in enormous clusters.

The CRUSH algorithm distributes data across a cluster using a hierarchical model, which in its simplest form has two levels. At the first level, the cluster is divided into independent failure domains. A failure domain, such as a rack or a power domain, is a set of storage nodes that are likely to fail together. At the second level, each failure domain contains OSDs (Object Storage Devices), each of which manages a storage device with a given capacity. Real CRUSH hierarchies often have more levels, such as datacenter, rack and host.

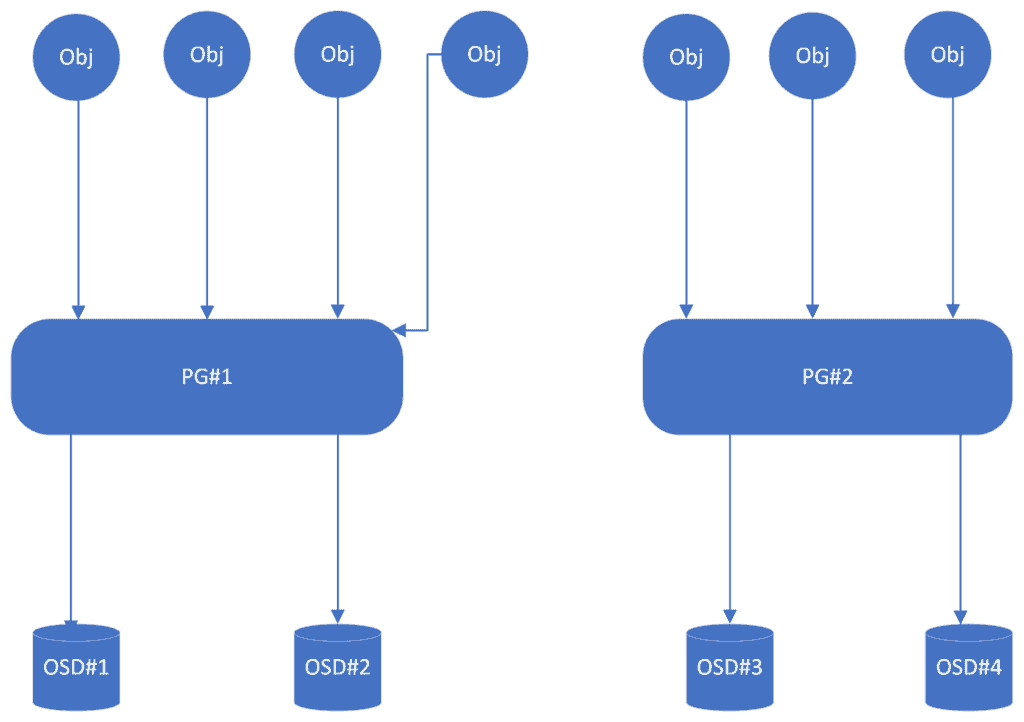

Placement Groups

In Ceph, data is first divided into objects, each identified by an object name (ID). Ceph hashes the object name and maps the hash to a Placement Group (PG) within the object’s pool. CRUSH then maps each PG to an ordered set of OSDs, based on the cluster’s current state and the hierarchical CRUSH map. Grouping objects into PGs lets Ceph track placement and recovery per PG rather than per object, and the hash spreads objects evenly across PGs so that they are not all stored on the same set of storage nodes.
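The object-to-PG step can be sketched in a few lines of Python. Ceph reduces the name hash to a PG number with a "stable mod" that behaves like an ordinary modulo when the PG count is a power of two and, when the PG count grows, only moves objects out of the PGs being split. The sketch assumes that scheme; it uses MD5 purely as a stand-in for the rjenkins hash Ceph actually uses, so its PG numbers will not match a real cluster’s, and `object_to_pg` is a hypothetical helper.

```python
import hashlib


def stable_mod(x: int, b: int, bmask: int) -> int:
    """Stable mod: equals x % b when b is a power of two (bmask = b - 1);
    otherwise folds values >= b back into the lower half of the mask."""
    if (x & bmask) < b:
        return x & bmask
    return x & (bmask >> 1)


def object_to_pg(pool_id: int, object_name: str, pg_num: int) -> str:
    """Map an object name to a PG id written as '<pool>.<pg in hex>'."""
    # MD5 stands in for Ceph's rjenkins hash in this sketch.
    digest = hashlib.md5(object_name.encode()).digest()
    h = int.from_bytes(digest[:4], "little")
    bmask = (1 << (pg_num - 1).bit_length()) - 1   # next power of two >= pg_num, minus 1
    return f"{pool_id}.{stable_mod(h, pg_num, bmask):x}"


print(object_to_pg(pool_id=3, object_name="my-object", pg_num=128))
```

Because the mapping is a pure function of the name and the PG count, every client computes the same PG for the same object without consulting anyone.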

CRUSH Map

Once the PG is known, CRUSH selects storage nodes (OSDs) for it using a tree-based placement technique. The CRUSH map is the tree structure CRUSH works on: it describes the cluster’s storage node hierarchy and the weight assigned to each node. A node’s weight determines how much data it stores relative to other nodes, so a larger disk is typically given a larger weight.

The CRUSH map captures the cluster’s topology: it defines the failure domains, the OSDs and the data replication rules. The monitors maintain the CRUSH map as part of the cluster map and distribute it to every OSD and client, which run the CRUSH algorithm locally to place and locate data.
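To show how a weighted tree plus hashing can yield placements, here is a simplified Python sketch in the spirit of CRUSH’s straw2 buckets. Several things are assumptions rather than Ceph’s implementation: the three-rack map is hypothetical, racks are treated as the failure domain, SHA-256 replaces Ceph’s rjenkins hash, floating-point `math.log` replaces Ceph’s fixed-point logarithm, and collisions are handled with a plain retry counter. Each child of a bucket gets a draw of ln(u) / weight, where u comes from a hash of the PG, the child and the attempt number; the highest draw wins, so children win in proportion to their weight.

```python
import hashlib
import math

# Hypothetical CRUSH map: rack -> host -> {osd: weight}.
# Weights stand in for capacity (e.g. TiB); racks are the failure domain.
CRUSH_MAP = {
    "rack1": {"host-a": {"osd.0": 1.0, "osd.1": 1.0}, "host-b": {"osd.2": 2.0}},
    "rack2": {"host-c": {"osd.3": 1.0, "osd.4": 1.0}},
    "rack3": {"host-d": {"osd.5": 2.0}, "host-e": {"osd.6": 1.0}},
}


def weight(node) -> float:
    """A leaf's weight is its own; a bucket's weight is the sum of its children."""
    if isinstance(node, (int, float)):
        return float(node)
    return sum(weight(child) for child in node.values())


def draw(pg: str, item: str, attempt: int, w: float) -> float:
    """Straw2-style draw: ln(u) / w, with u in (0, 1] derived from a hash."""
    digest = hashlib.sha256(f"{pg}/{item}/{attempt}".encode()).digest()
    u = (int.from_bytes(digest[:8], "big") + 1) / 2**64
    return math.log(u) / w


def choose(pg: str, bucket: dict, attempt: int) -> str:
    """Pick the child with the highest draw; heavier children win more often."""
    return max(bucket, key=lambda name: draw(pg, name, attempt, weight(bucket[name])))


def place_pg(pg: str, replicas: int, crush_map: dict = CRUSH_MAP) -> list[str]:
    """Choose one OSD in each of `replicas` distinct racks for a PG."""
    if replicas > len(crush_map):
        raise ValueError("not enough failure domains for the requested replicas")
    racks: list[str] = []
    for attempt in range(100 * replicas):          # bounded retries on collisions
        if len(racks) == replicas:
            break
        rack = choose(pg, crush_map, attempt)
        if rack not in racks:                      # same rack twice: try again
            racks.append(rack)
    if len(racks) < replicas:
        raise RuntimeError("could not find enough distinct failure domains")
    osds = []
    for r, rack in enumerate(racks):
        host = choose(pg, crush_map[rack], r)                # descend rack -> host
        osds.append(choose(pg, crush_map[rack][host], r))    # host -> OSD
    return osds


print(place_pg("1.7f", replicas=3))
```

Every OSD and client holding the same map computes the same answer, so no central lookup is needed. The straw2-style draw is the design choice worth noting: each child’s draw depends only on its own weight, so changing one OSD’s weight mostly moves data to or from that OSD’s branch of the tree rather than reshuffling the whole cluster.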

Ceph Replication

CRUSH’s data placement policies are adjustable. Administrators can choose how data is replicated throughout the cluster based on availability, performance and scalability requirements. For example, an administrator could replicate data across different failure domains for high availability, or place it on the OSDs with the highest bandwidth for performance.

The CRUSH algorithm ensures that each object is saved on multiple storage nodes for fault tolerance. The number of copies of an object stored in the cluster is governed by a configurable replication factor. CRUSH places the copies on separate storage nodes, or in separate failure domains, to reduce the risk of data loss from hardware failures.
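The replication factor trades capacity for durability, and a little arithmetic makes the trade-off concrete. In Ceph, a replicated pool’s `size` setting is its replication factor, and `min_size` is the number of copies that must be available for a PG to keep serving I/O; size 3 with min_size 2 is a common combination. The function below is a hypothetical back-of-the-envelope helper, not a Ceph tool, and it ignores metadata overhead and the free-space headroom a real cluster needs.

```python
def replicated_pool_summary(raw_tib: float, size: int, min_size: int) -> dict:
    """Rough capacity and failure-tolerance figures for a replicated pool.

    size     -- replication factor: copies kept of every object
    min_size -- copies that must be available for a PG to keep serving I/O
    Ignores metadata overhead and the free-space headroom a real cluster needs.
    """
    if not 1 <= min_size <= size:
        raise ValueError("expected 1 <= min_size <= size")
    return {
        "usable_tib": raw_tib / size,                  # each byte is stored `size` times
        "copies_lost_without_data_loss": size - 1,     # one surviving copy can be re-replicated
        "copies_lost_while_serving_io": size - min_size,
    }


print(replicated_pool_summary(raw_tib=300, size=3, min_size=2))
# {'usable_tib': 100.0, 'copies_lost_without_data_loss': 2, 'copies_lost_while_serving_io': 1}
```

With three copies, 300 TiB of raw disk yields roughly 100 TiB of usable space, in exchange for surviving the loss of two copies of any object.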

Why Choose Ceph?

Ceph storage offers a number of benefits for enterprises looking for a scalable, reliable and flexible storage solution. Some of the key benefits of using Ceph storage include:

Open-Source, Large Community Support

Ceph is a popular open-source data storage system capable of storing petabytes of data across many nodes. Sage Weil created it as part of his doctoral research at the University of California, Santa Cruz, and its first stable release, dubbed “Argonaut”, arrived in 2012. Ceph is freely available, and a large developer community keeps extending and improving it. Quincy 17.2.0, released on April 19, 2022, was the latest release at the time of writing. Ceph’s website is https://ceph.io/en/.

Scalability

Ceph storage scales smoothly from a few nodes to thousands, which makes it ideal for businesses that need storage that grows with them. Alongside scalability, it is highly available and fault-tolerant. This open-source software gives organizations a way to source flexible, dependable and cost-effective block, object and file storage from a unified platform.

Flexibility

Ceph can be configured to work with a variety of storage devices, including hard drives, solid-state drives and NVMe devices, which makes it highly adaptable. It is also compatible with a wide range of storage interfaces: block, object and file storage.

Cost-Effective

Ceph runs on widely available, inexpensive commodity hardware, so businesses of all sizes can use it. Because it can grow, it also suits large organizations that need petabyte-scale storage. Data redundancy, durability and availability keep data secure and accessible. Ceph scales out by adding nodes to its cluster; each node contributes both storage and processing power, so the load is spread across nodes. Because it works with both hard drives and solid-state drives, Ceph can be used in diverse environments.

Modular and Adaptable

The Ceph storage system is both modular and adaptable. It is possible to install it locally or in the cloud, and it is compatible with a wide variety of software programs as well as online services. The Ceph storage system is flexible and able to accommodate a variety of data formats, including structured, semi-structured, and unstructured data.

Efficient Storage Method

Ceph also uses CRUSH, an innovative data distribution and replication method developed for Ceph, which balances data efficiently across the cluster. This provides scalable, fault-tolerant storage, including for cloud deployments.

Versatility

Ceph storage is capable of storing a wide variety of data types, including structured, semi-structured, and unstructured data. Additionally, it is able to integrate with a diverse array of software programs and online services.

Conclusion

In a nutshell, Ceph is an open-source, distributed storage solution that is scalable, dependable and highly flexible. Scalability, fault tolerance and cost efficiency are just a few of the advantages it offers businesses. It is built to manage petabytes of data and can be scaled quickly to meet the requirements of a business as it expands its operations. Through data redundancy, durability and availability, Ceph keeps data secure and accessible whenever it is needed.